Samsung embeds AI into high-bandwidth memory to beat up on DRAM

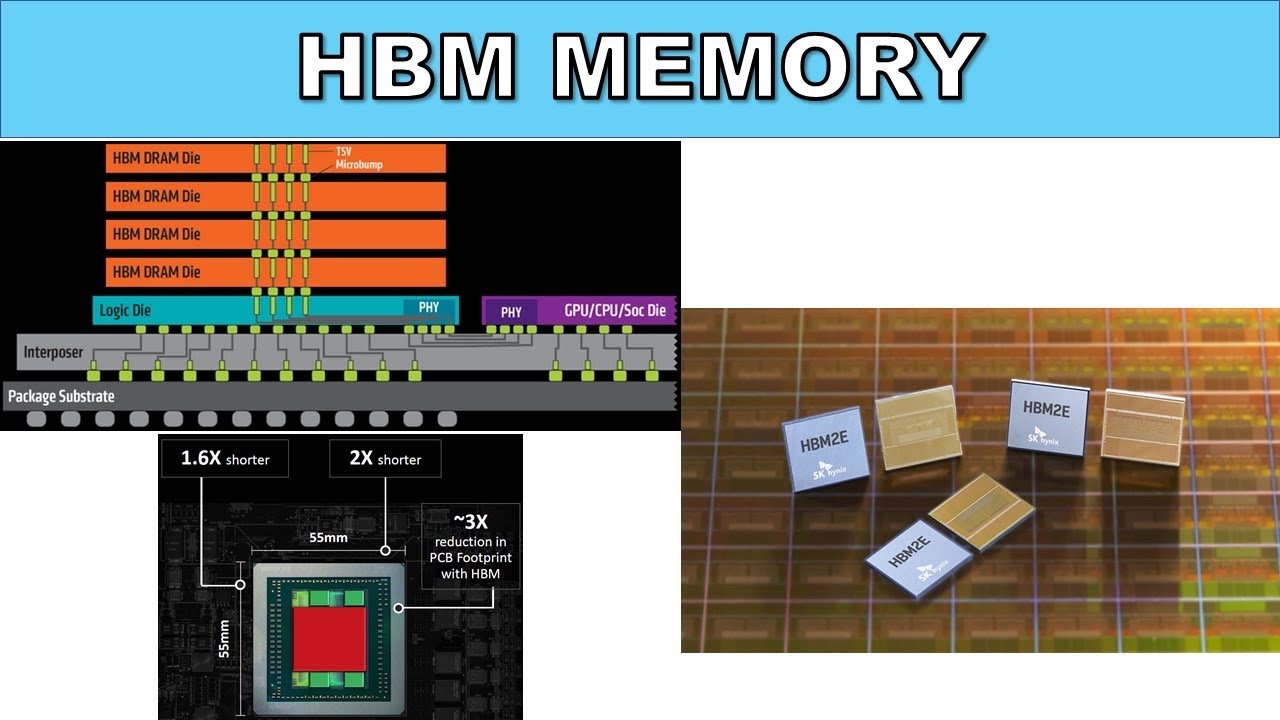

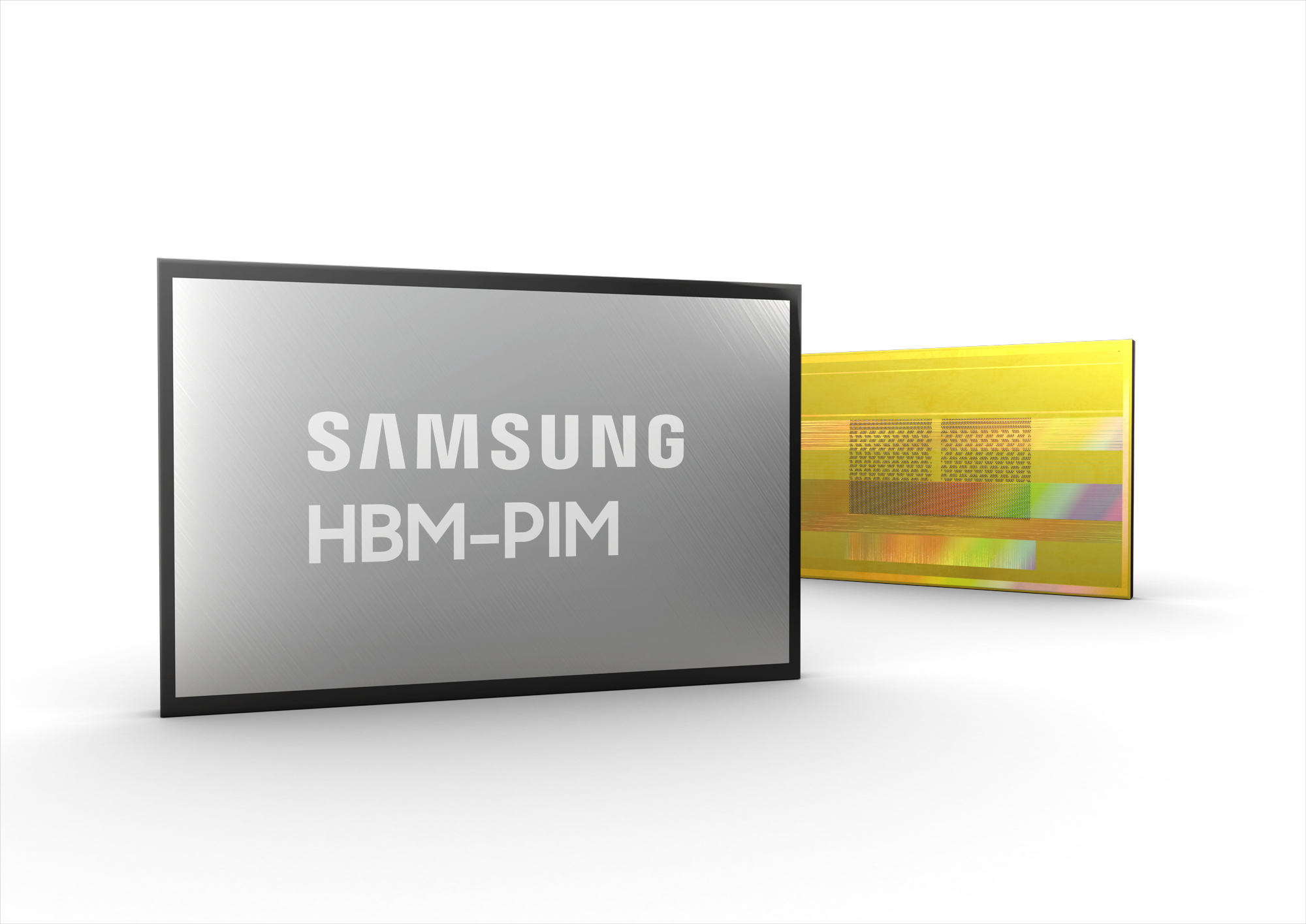

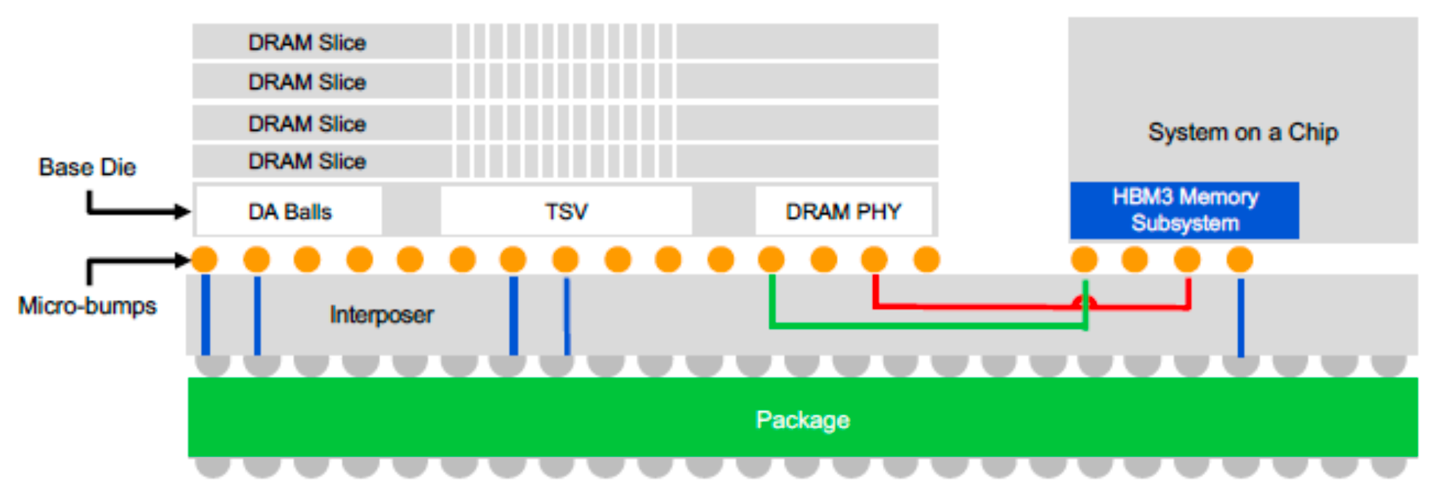

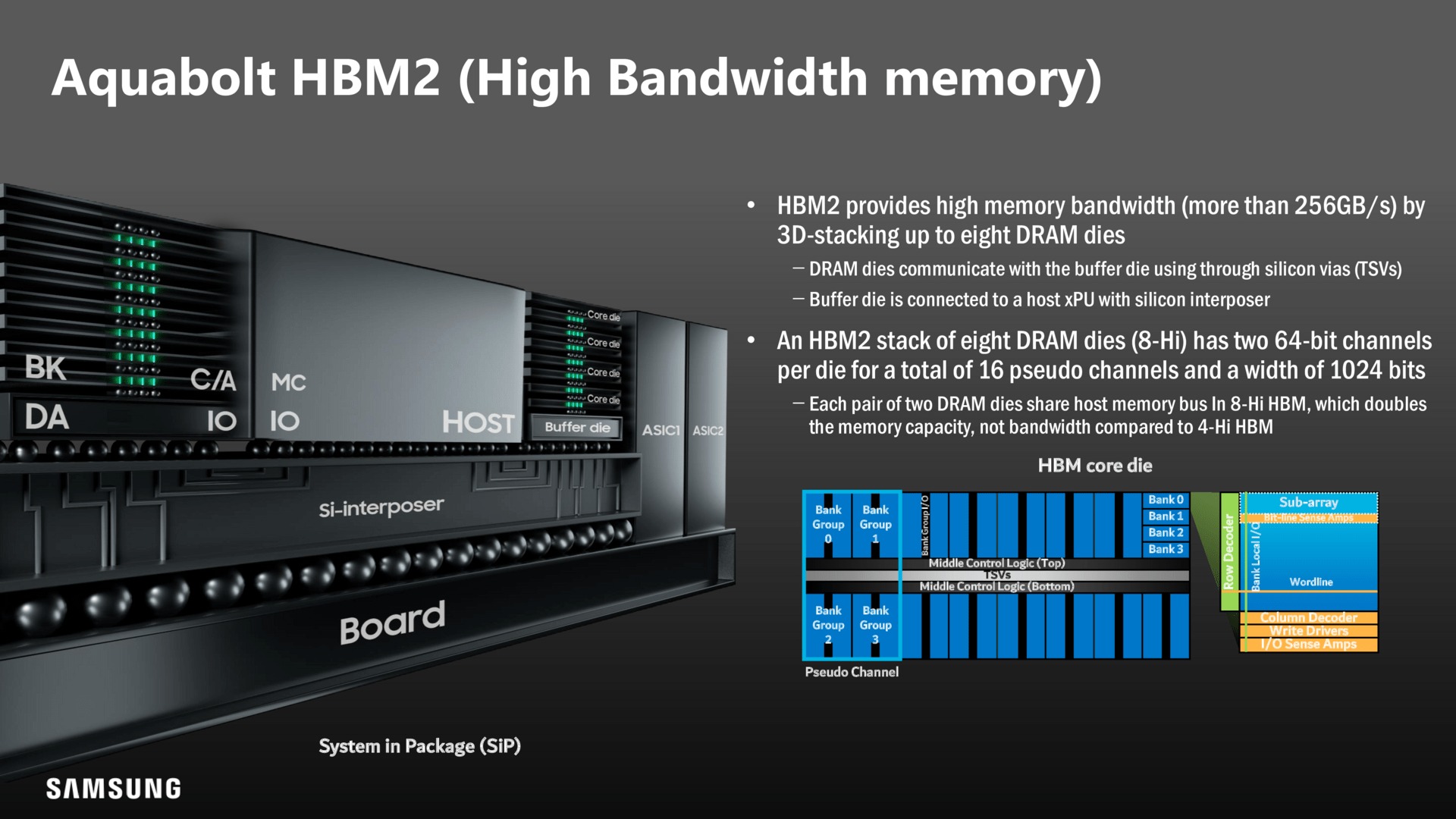

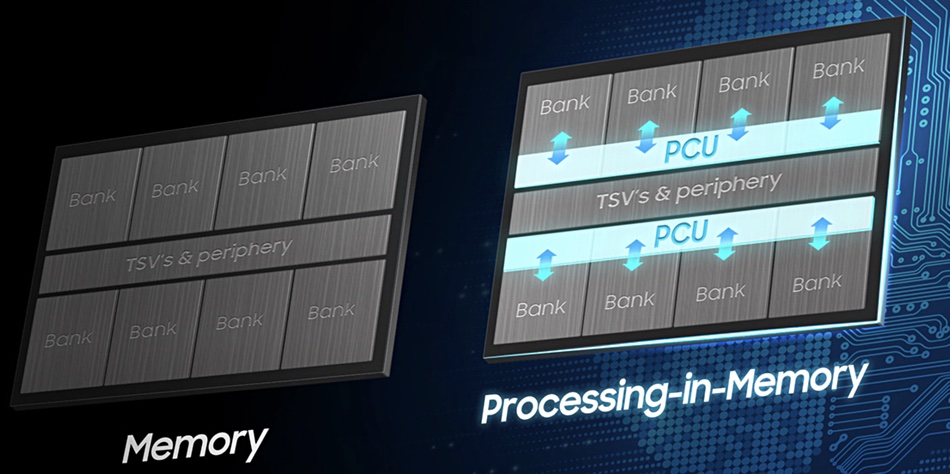

Samsung has announced a high-bandwidth memory (HBM) chip with embedded AI, designed to accelerate compute performance in high-performance computing and large data centres. The AI technology is called PIM, short for ‘processing-in-memory’. Samsung’s HBM-PIM design delivers faster AI data processing, as data does not have to move to the main […]

SK Hynix Envisions 600-Layer 3D NAND & EUV-Based DRAM

Samsung announces first successful HBM-PIM integration with Xilinx Alveo AI accelerator

How designers are taking on AI's memory bottleneck

Samsung adds an AI processor to its High-Bandwidth memory to ease bottlenecks

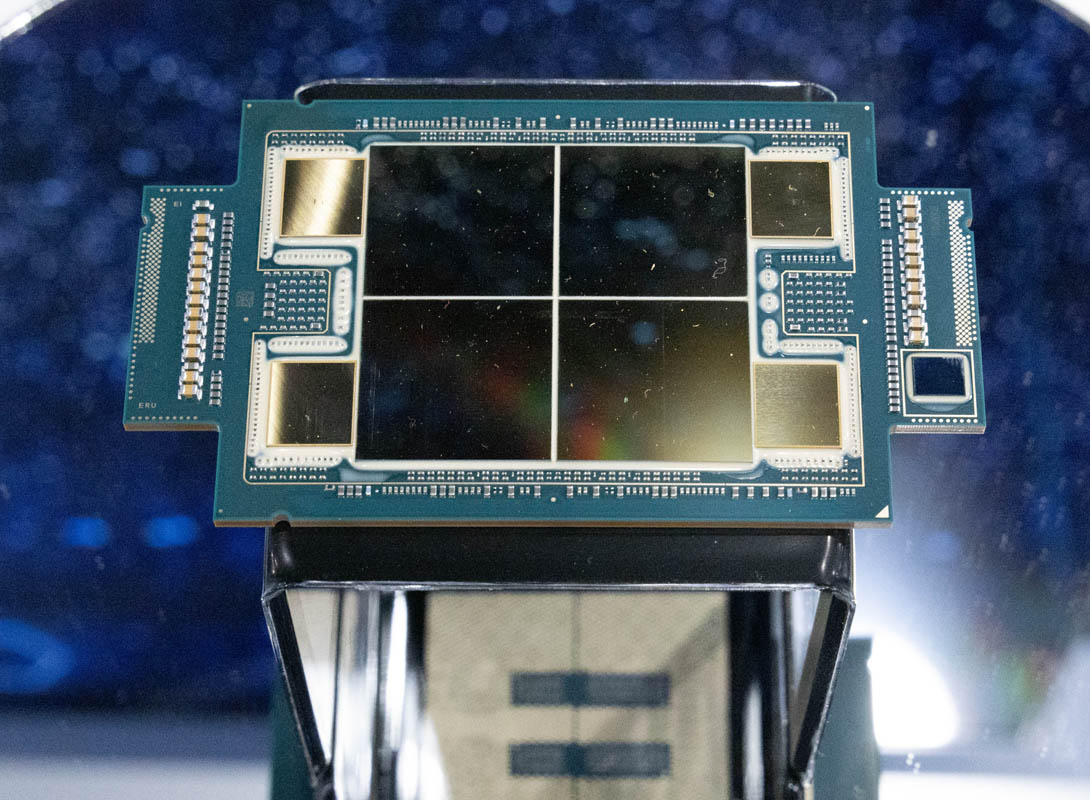

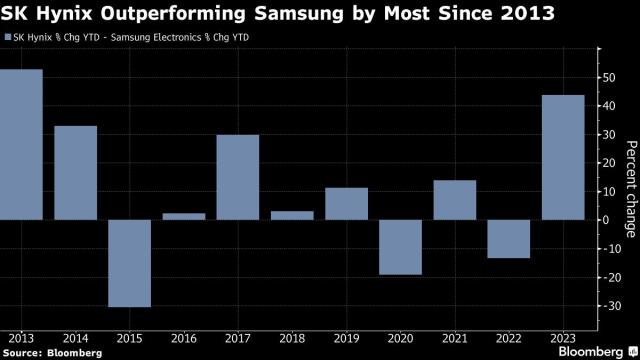

Investors Bet Samsung's Smaller Memory Chip Rival SK Hynix Will Be an AI Winner

Samsung embeds AI into high-bandwidth memory to beat up on DRAM – Blocks and Files

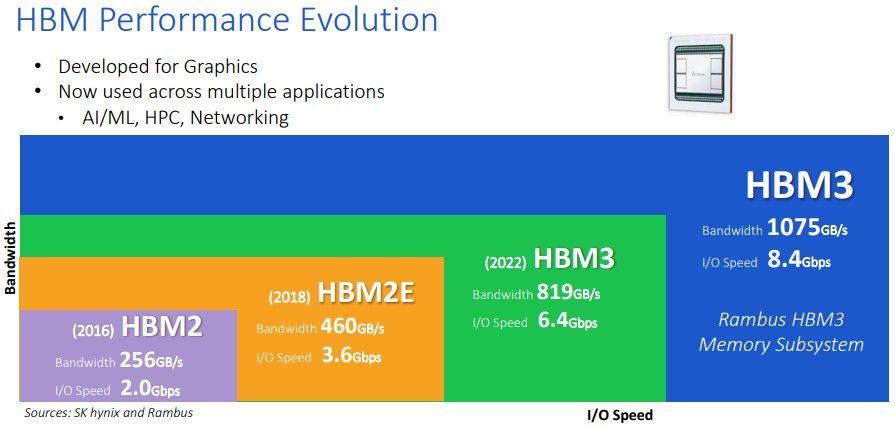

AI demand drives expanded high-bandwidth memory usage

AI expands HBM footprint – Avery Design Systems

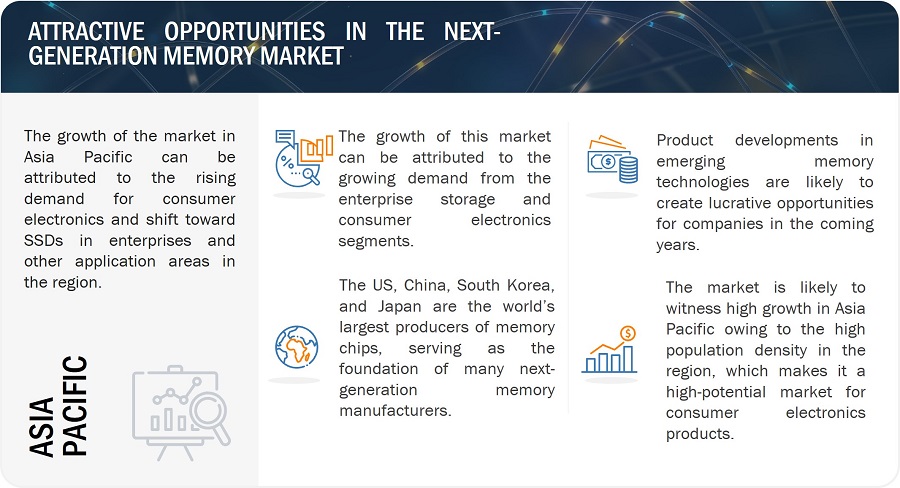

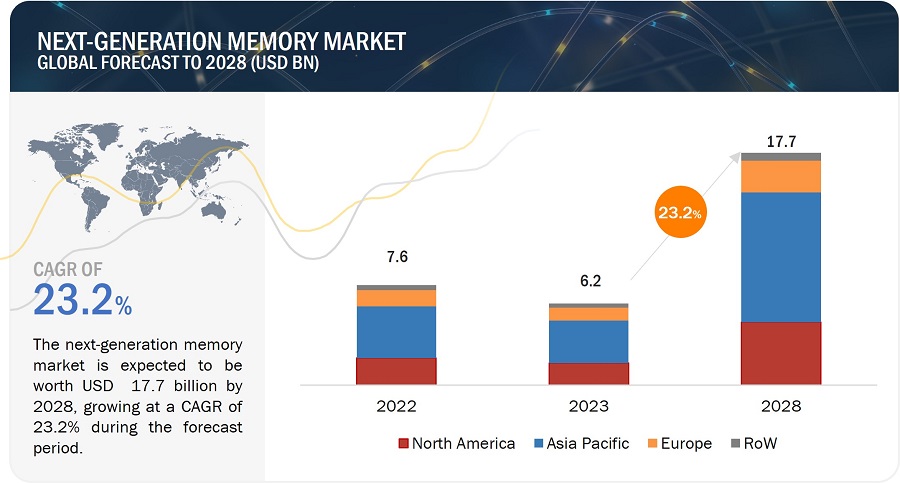

Next-Generation Memory Market Size, Share, Industry Report, Revenue Trends and Growth Drivers - Forecast to 2030

Samsung to launch low-power, high-speed AI chips in 2024 - KED Global

SK Hynix and Samsung's early bet on AI memory chips pays off
